The Open Source Community Health Analysis Method

February 2, 2014

Building on the understanding of open source community (OSC) vitality developed so far, this post introduces a systematic approach that enables software product managers to evaluate the vitality of candidate open source communities.

The authors want to stress that the primary objective of the following health analysis method is not to present a universally applicable method suitable for every type of open source community or software company. Instead, the focus lies on developing a situational understanding of each case and then selecting the method fragments best suited to the respective situation. The aim of the health analysis method is thus to provide software product managers with a toolset that enables them to make the best assessment in a variety of scenarios.

In this post we introduce the concept and the features of a method fragment pool and method assembly (Harmsen, Brinkkemper, & Oei, 1994), which are used to create situational methods for assessing OSC vitality. We also present a selection rationale for choosing and assembling appropriate method fragments. Finally, the post contains a collection of fragments that can be used individually or in combination to perform an OSC vitality assessment, as well as a sub-section on method calibration.

Method Fragment Pool

Derived from the domain of method engineering, this segment introduces the concept of situational method design and the concept of a method fragment pool, which contains the base elements for method assembly. The general principles of situational method engineering (Van De Weerd & Brinkkemper, 2008) fully apply. This section, however, focuses on a customized adaptation to the specific needs of software product managers and their responsibilities regarding OSC dependency management.

Building on the work of Harmsen, Brinkkemper and Oei (1994), Figure 9 depicts a process flow for creating a situational method for OSC vitality assessment. The diagram also includes an iterative cycle focused on customizing method fragments to a community's unique characteristics.

The building blocks of a situational method are so-called method fragments, which are stored in the method fragment pool. Each element in the pool assesses one specific OSC vitality aspect and can be used either by itself or in combination with other fragments. Each fragment is linked to situational factors that indicate the community types and software products for which it is best suited.

Fragment selection begins with an assessment of OSC characteristics, which narrows down the number of candidate method fragments for the given case. Together with the requirements and resources of the respective software product, these characteristics form the selection rationale for choosing suitable fragments from the existing fragment pool.

Figure 9 – Situational OSC Vitality Method Design Process

Following the selection process, the chosen fragments are assembled into a situational method. No particular order is required, but a logical structure or a segmentation into fragment groups can improve the method's usability. Fragment importance can vary with the relative significance of the community aspects involved. Individual fragments can therefore be given greater weight than less significant ones, depending on how relevant they are to the overall quality of the software product.

After method assembly, the individual fragments and the entire method can undergo an iterative process aimed at improving the accuracy of the assessed OSC vitality. Historic or present community data can be used to review fragment and method accuracy. If a given fragment is found to generate inaccurate or contradictory vitality data, it can be adjusted, its result can be weighted less, or it can be removed from the situational method entirely. All community-specific adjustments can then be fed back into the fragment pool. If another software product with different project characteristics later needs to assess the same OSC, the new and more accurate fragments can then be reused.
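The calibration loop described above can be sketched in Python. This is a minimal sketch that rests on several assumptions. Each fragment's output is compared with reference vitality values derived from historic community data, and accuracy is measured as mean absolute error. The two thresholds (`DOWNWEIGHT_ERROR`, `REMOVE_ERROR`) and the function name `calibrate` are hypothetical and are not part of the method.

```python
from statistics import mean

# Hypothetical thresholds in index points; the method does not prescribe values.
DOWNWEIGHT_ERROR = 15.0  # above this error, the fragment's weight is halved
REMOVE_ERROR = 40.0      # above this error, the fragment is dropped entirely


def calibrate(fragments, reference):
    """Review fragments against reference vitality values from historic data.

    fragments: dict name -> {"weight": float, "scores": list of floats}
    reference: list of reference values covering the same periods as the scores
    Returns a new dict; fragments judged contradictory are omitted.
    """
    calibrated = {}
    for name, fragment in fragments.items():
        error = mean(abs(s - r) for s, r in zip(fragment["scores"], reference))
        if error > REMOVE_ERROR:
            continue  # remove the fragment from the situational method
        weight = fragment["weight"]
        if error > DOWNWEIGHT_ERROR:
            weight /= 2  # keep the fragment but weight its result less
        calibrated[name] = {"weight": weight, "scores": fragment["scores"]}
    return calibrated


# Illustrative input only: the scores below are made up.
reference = [100, 110, 120]
fragments = {
    "external_metrics": {"weight": 1.0, "scores": [102, 108, 121]},
    "internal_activity": {"weight": 1.0, "scores": [80, 90, 100]},
    "activity_cycles": {"weight": 1.0, "scores": [150, 160, 170]},
}
result = calibrate(fragments, reference)
```

The sketch only covers down-weighting and removal. Adjusting a fragment itself, for example by changing its base value, would be a separate, community-specific step.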

Fragment Categorization and Selection Rationale

This sub-section introduces a number of criteria for selecting situation-specific method fragments for a given OS community or software development project. The intention is to create a practical heuristic that can be applied quickly during method assembly. The rationale does not enforce the selection of specific fragments; it merely serves as an indicator of fragment suitability. The three criteria were chosen because software product managers can apply them easily and because they apply universally to varying types of OSCs. The first two indicators stem from the analyzed scientific literature and focus on specific community characteristics. The third indicator is based on the authors' estimate of the resources a fragment requires and targets a software product's development scope.

Community Size

The most evident indicator for fragment selection is community size. Size can be measured by the number of OSC developers, by the number of core developers or by the total number of registered community members. This indicator needs to be adjusted to each community's nature and characteristics. It can nevertheless help to quickly exclude fragments that are only applicable to large or very small OSC types. Each method fragment is labeled with one or more community size indicators, suggesting the most suitable community sizes.

Figure 10 – Fragment Selection Indicator – Community size

Community Maturity

As shown before, community age is a direct indicator of a community's probability of survival. It was also found to correlate significantly and positively with the maturity of the community's software development processes and governance structure. This makes it a suitable indicator for selecting applicable method fragments, as it indicates which fragments are best suited for mature or young OSCs. As with the previous indicator, each fragment can have one or multiple labels describing matching OSC criteria.

Figure 11 – Fragment Selection Indicator – Community maturity

Fragment Costs

From the perspective of software product managers, available time and financial resources can vary greatly between managed software products. Even a promising method fragment for vitality assessment may therefore not be feasible to use. This can be the case if the fragment exceeds the available project resources, or if the overall analysis would benefit from focusing efforts on less resource-intensive fragments. In contrast to the previous indicators, each fragment is labeled with only one cost indicator. This indicator expresses the authors' estimate of the financial or time investment needed to execute the corresponding method fragment.

Figure 12 – Fragment Selection Indicator – Fragment costs
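Taken together, the three indicators act as labels on each fragment, so pre-selection becomes a simple filter. This is a minimal sketch that assumes string labels for size and maturity and an integer cost scale from 1 to 4. The label vocabulary, the cost numbers and the names `Fragment` and `select_fragments` are illustrative assumptions. They are not the values shown in Figures 10 to 12.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fragment:
    name: str
    sizes: frozenset       # one or more community-size labels
    maturities: frozenset  # one or more maturity labels
    cost: int              # exactly one cost label: 1 (low) to 4 (high), hypothetical


def select_fragments(pool, size, maturity, max_cost):
    """Keep fragments whose labels match the OSC and whose cost fits the budget."""
    return [
        f for f in pool
        if size in f.sizes and maturity in f.maturities and f.cost <= max_cost
    ]


ALL_SIZES = frozenset({"small", "medium", "large"})
ALL_MATURITIES = frozenset({"young", "medium", "mature"})

# Illustrative labels, loosely following the fragment descriptions in this post.
pool = [
    Fragment("Monitor external metrics", ALL_SIZES, ALL_MATURITIES, 3),
    Fragment("Activity cycles", ALL_SIZES, frozenset({"medium", "mature"}), 2),
    Fragment("Source code quality", ALL_SIZES, ALL_MATURITIES, 4),
]
chosen = select_fragments(pool, size="medium", maturity="young", max_cost=3)
```

In this example only the external metrics fragment survives. The activity cycle fragment is excluded by maturity and the code review fragment by cost.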

Situational Method Fragments

By combining the selection rationale and the vitality indicators, this sub-section presents a number of OSC health method fragments that software product managers can use individually or in combination. In the following subsections, we present five fragment categories, each aimed at different identified vitality indicators.

Regarding fragment completeness, three vitality indicators have not been included in the fragment pool: OSC license utilization, user interest and bug report frequency were deliberately excluded from the method base. The authors believe that these areas are either not relevant for software product managers or have been reviewed with contradictory results in the scientific literature. For example, software product managers are unlikely to consider OSCs with licenses unsuitable for commercial use for integration in the first place.

Furthermore, the authors would like to highlight that this method does not attempt to dictate how to extract or quantify data. Instead, it presents a guideline that can and should be adjusted to the particular characteristics of each analyzed OSC.

Common Method Base

All OSC Health Analysis Methods contain two base fragments that act as a foundation for the entire vitality assessment. Figure 13 depicts the first fragment that focuses on data availability, as well as the second fragment that serves the purpose of establishing a base performance index for interval based method fragments.

Figure 13 – OSC Health Method: Common method base

Both fragments are a prerequisite for structured data analysis and are therefore not labeled with a selection rationale. They should be seen as guidance for adjusting the freely selected OSC fragments to a community's accessible data. They also standardize data operationalization so that all index-based fragments produce consistent and reproducible results. This is achieved by calibrating each interval variable to one common starting date and standardized base points. As is common practice for stock market indices, this approach enables detailed performance tracking of each vitality variable over time. It also enables aggregate constructs for individual vitality aspects or for overall OSC vitality.
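Calibrating an interval variable to a common starting date and standardized base points can be sketched as follows. This sketch assumes monthly data keyed by 'YYYY-MM' strings and a base of 100 points. Both choices, and the function name `rebase`, are assumptions rather than part of the method.

```python
def rebase(series, start, base_points=100.0):
    """Rebase a monthly series so that its value at the common start date
    equals `base_points`, similar to how many stock indices start at 100.

    series: dict mapping 'YYYY-MM' -> raw value; months before `start` are dropped.
    """
    base = series[start]
    if base == 0:
        raise ValueError("the value at the common start date must be non-zero")
    return {
        month: value / base * base_points
        for month, value in sorted(series.items())
        if month >= start  # 'YYYY-MM' strings sort chronologically
    }


# Illustrative monthly contributor counts (made up).
contributors = {"2007-07": 5, "2007-08": 4, "2007-09": 6, "2007-10": 3}
indexed = rebase(contributors, start="2007-08")
```

Once every variable is rebased to the same start date, a value of 150 means the same thing for each of them: 50% above the starting level. This makes the series directly comparable and lets them be aggregated.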

Performance Index based Method Fragments

Looking at distinctly quantifiable vitality indicators, this sub-section introduces a number of method fragments that can be used to generate interval based vitality indices.

The first fragment, depicted in Figure 14, aims at external OSC metrics and is most suitable for measuring outward facing OSC activities. This includes actions, such as extracting the number of OSC downloads over time, tracking online search popularity or monitoring release frequency. All activities produce individual results, as well as an aggregate index, representing the overall metrics performance.

The fragment is suitable for a broad range of OSCs with varying degrees of maturity and size. However, because the indices must be established and their changes tracked, the fragment is relatively expensive and has the second-highest cost rating.

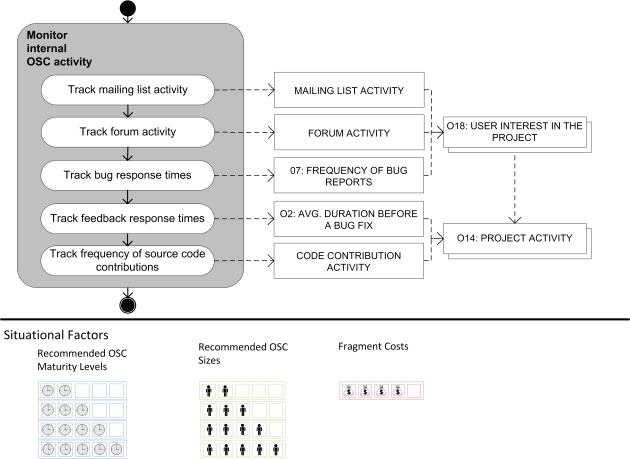

Figure 14 – OSC Health Method Fragment: Monitor external metrics

In contrast to Figure 14, the following fragment (Figure 15) measures internal OSC activity and attempts to accurately depict internal community processes. It measures OSC aspects such as bug or feedback response times, mailing list or forum activity and source code contributions. As with the external metrics, each activity of this fragment produces a vitality index for the respective variable. These indices are in turn aggregated into an internal OSC performance index. Because the two fragments are similar in nature, this fragment carries the same selection rationale as the one in Figure 14.

It is noteworthy that the indicated fragment costs for Figure 14 and Figure 15 are based on pessimistic assumptions regarding data availability. Initial experiments with OSC vitality analysis have shown that even among popular and well established communities, broad data availability should not be considered a given.

Figure 15 – OSC Health Method Fragment: Monitor internal community activity

The final index-driven method fragment corrects the generated indices for cyclical community activity. It takes a community's natural activity cycle into account and adjusts the individual and aggregate indices accordingly, correcting activity levels that would otherwise appear too low or too high. Because it requires historic data as well as mature OSC processes, this fragment is only suited for medium to high OSC maturity levels. However, the existing indices only need adjusting and no new data operationalization is necessary. This makes the fragment less expensive than its two predecessors.
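The fragment does not prescribe how a community's natural activity cycle should be quantified. One common option, swapped in here as an assumption, is a simple seasonal index. It divides the average activity of each calendar month by the overall average, and each index value is then divided by its month's factor. The names `seasonal_factors` and `deseasonalize` are hypothetical. The approach needs several years of history, which is in line with the fragment's restriction to medium and high maturity levels.

```python
from collections import defaultdict
from statistics import mean


def seasonal_factors(history):
    """Estimate one factor per calendar month from historic monthly activity.

    history: dict 'YYYY-MM' -> activity. A factor above 1 marks a month that
    is usually busier than average, below 1 one that is usually quieter.
    """
    by_month = defaultdict(list)
    for key, value in history.items():
        by_month[int(key[5:7])].append(value)
    overall = mean(history.values())
    return {month: mean(values) / overall for month, values in by_month.items()}


def deseasonalize(index, factors):
    """Divide each index value by the factor of its calendar month.

    Raises KeyError for calendar months missing from the historic data.
    """
    return {key: value / factors[int(key[5:7])] for key, value in index.items()}


# Illustrative data only: busy Januaries, quiet Julys.
history = {"2011-01": 120, "2011-07": 60, "2012-01": 140, "2012-07": 80}
factors = seasonal_factors(history)
adjusted = deseasonalize({"2013-01": 130, "2013-07": 70}, factors)
```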

Figure 16 – OSC Health Method Fragment: Activity cycles

Scale Based Method Fragments

Having introduced the interval-based vitality fragments, this section presents a number of ordinal-scale Health Method fragments. They are based on vitality indicators that cannot be represented on a distinct unit scale and instead rely on Likert scales that are individually designed and adjusted for each fragment. This approach does not support detailed monitoring of distinct variable changes. It does, however, allow proven indicators that are otherwise difficult to operationalize to be tracked, which broadens the OSC analysis.

Figure 17 – OSC Health Method Fragment: Source code quality

Figure 17 evaluates OSC vitality from the perspective of code quality. Once a definition of quality has been created for the OSC in question, the method establishes a Likert scale that indicates whether code quality improves or declines over time. After each review, the results are documented and the current state of code quality is rated. Code reviews are considered good practice when integrating software components, so the fragment is suitable for communities of virtually all sizes and degrees of maturity. However, manual code reviews are also very expensive. They require considerable resources, typically at the expense of development efforts.
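Likert ratings are ordinal, so tracking them over time can only tell whether quality moved up, moved down or stayed the same between reviews. This is a minimal sketch that assumes ratings are stored as (review date, level) pairs, with 1 as the lowest and 5 as the highest level. The function name `rating_changes` is hypothetical.

```python
def rating_changes(ratings):
    """Classify the change between consecutive Likert ratings.

    ratings: list of (review_date, level) tuples in chronological order,
    where level runs from 1 (lowest) to 5 (highest). Only the direction of
    change is reported, because ordinal levels have no meaningful distance.
    """
    changes = []
    for (_, previous), (date, current) in zip(ratings, ratings[1:]):
        if current > previous:
            trend = "improved"
        elif current < previous:
            trend = "declined"
        else:
            trend = "unchanged"
        changes.append((date, trend))
    return changes


# Illustrative review history (made up).
history = [("2012-01", 3), ("2012-07", 4), ("2013-01", 4), ("2013-07", 2)]
changes = rating_changes(history)
```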

Similar to code quality, Figure 19 depicts a method fragment aimed at evaluating the state of OSC project documentation. This approach is only suited for medium to very mature OSCs, but it is less resource-intensive than a manual code review. After a suitable scale for documentation quality has been established, the documentation can be rated and re-evaluated at different points in time. A majority of the interviewed open source experts mentioned documentation quality as a quick indicator of well-practiced community governance. It also indicates the time investment necessary to integrate an OSC.

Figure 18 – OSC Health Method Fragment: Core developer reputation

The purpose of the final two scale driven method fragments is to review OSC core developer reputation and motivation.

As in the previous approaches, the fragment in Figure 18 first establishes a reputation ranking scheme for core developers. It then proceeds to identify the developers and to interview domain experts, and both steps are necessary for populating the ranking. Core developers play an important role in most OSCs, so this fragment is suited to a broad range of maturity levels. It becomes less significant for large and very large communities, however, because other vitality factors such as community governance or a growing member base gain in significance there. Due to its qualitative nature and the typically small number of core developers, this fragment requires only a medium time investment.

Figure 19 – OSC Health Method Fragment: Documentation quality

The final fragment in this category (Figure 20) reviews one of the main drivers of OSC vitality: core developer motivation. First, the core developers are identified from a project's source code repositories or documentation. The fragment then proposes the design of a core developer questionnaire and a matching motivation scale. Based on this, the identified candidates can be interviewed and their motivation to contribute to the project tracked over time. Because this approach is similar to the one in the developer reputation fragment, the recommended OSC size and maturity indicators are identical. The fragment costs depend directly on the core developers' willingness to cooperate with the product manager and should be reviewed in light of previous cooperation attempts.

Figure 20 – OSC Health Method Fragment: Core Developer motivation

Software Development Practices Checklist

In contrast to the index-based and Likert-scale-based method fragments, this subsection introduces a fragment that records only the binary state of a number of vitality indicators within OSC software development practices. Both the reviewed scientific literature and the interviewed OS experts frequently mentioned the software development processes within OSCs as a strong indicator of code quality and community vitality. The fragment is suitable for all but the least mature and youngest communities and can depict a community's stability quickly and without great time investment. Additionally, communities that improve their development practices are also likely to grow in maturity and thus increase their probability of survival.

Figure 21 – OSC Health Method Fragment: Software development practices

Alignment and Resource Optimization with Other OSC Stakeholders

The final fragment of the fragment pool aims at reducing OSC monitoring costs. It does so by aligning business interests and dividing monitoring efforts among all commercial stakeholders with an interest in an OSC. Figure 22 illustrates an approach that systematically searches for fellow commercial stakeholders within a given OSC and attempts to identify mutually beneficial opportunities for all involved parties. Open source software is equally available to all software integrators. The authors therefore assume that the commercial parties identified often do not compete directly over access to the OSC but share an interest in its vitality and software quality. Sharing monitoring costs can thus significantly reduce individual project managers' workloads. Alternatively, such an approach can lead to a more accurate vitality analysis, as the increased cumulative resources allow a larger number of method fragments to be used. Given the fragment's nature, the authors consider it suitable for all community sizes and maturity levels. Furthermore, relative to its benefits, the resource investment is marginal. It only involves search costs for identifying other stakeholders and the time invested in initial discussions.

Figure 22 – OSC Health Method Fragment: Align goals with existing commercial stakeholders

Method Assembly and Calibration

Depending on fragment selection, the final situational method can contain any combination of index or scale based elements, as well as binary results. Additionally, two fragments with the purpose of either correcting activity indexes for cyclical trends or for optimizing OSC monitoring efforts can be included.

The only fragment required for method assembly is the common method base. Beyond that, any number or combination of fragments can be used within the situational method. Based on the chosen selection rationale, software product managers can group fragments and are free to either aggregate method results or compare them individually.

Index-driven fragments in particular produce performance indices that can be aggregated into a unified OSC performance index. Besides overall OSC health, individual vitality indicators can be monitored separately in order to identify trends within specific vitality areas. The approach also supports index adjustment, in which individual vitality indices are weighted according to their importance for a given OSC. A situational method can thus be tuned not only through fragment selection but also by adjusting fragments to local OSC realities.

Method artifacts generated by non-index fragments lack a distinct scale. They can, however, still be used to register changes in vitality. By tracking variations in the Likert ratings individually, each method fragment can serve as an indicator of changes in OSC health. The same applies to the specific case of monitoring software development practices: despite the binary nature of the results, each can be compared with the OSC's past practices. Added practices can thus be seen as an improvement in community health or code quality, while abandoned practices can be seen as a decline in community performance.
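Comparing a checklist of binary development practices against an earlier snapshot reduces to two set differences. This sketch assumes that each checklist is stored as a mapping from practice name to a boolean. The practice names used below are hypothetical examples, not the checklist from Figure 21, and `compare_practices` is a hypothetical name.

```python
def compare_practices(previous, current):
    """Diff two binary checklists of software development practices.

    previous, current: dict practice name -> bool (True = practice in use).
    Added practices suggest improvement; abandoned ones suggest decline.
    """
    def in_use(checklist):
        return {practice for practice, used in checklist.items() if used}

    before, after = in_use(previous), in_use(current)
    return {"added": sorted(after - before), "abandoned": sorted(before - after)}


# Hypothetical practice names, for illustration only.
previous = {"unit tests": True, "code review": False, "release roadmap": True}
current = {
    "unit tests": True,
    "code review": True,
    "release roadmap": False,
    "continuous integration": True,
}
diff = compare_practices(previous, current)
```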

Although not necessary, fragment grouping makes the method easier to use and gives it a clear structure. For illustrative purposes, all presented method fragments have been assembled into a single situational method that covers all vitality aspects introduced in this thesis.

Method Application

To present a practical example of the method's application, a representative OSC was selected for data analysis. Given the Health Analysis Method's situational nature and the great diversity among OSCs, the main reason for choosing a single OSC was to introduce a set of steps for applying the method fragments to a realistic assessment scenario. A further aim was to analyze a broadly known OSC, which makes the example as relatable as possible for project managers familiarizing themselves with the new method.

OSC selection was based on the popularity index of GitHub, one of the most widespread public source code repositories. The platform hosts a vast number of open source projects and provides additional features such as social components, project collaboration tools, issue tracking and code reviews. GitHub is also built around the distributed revision control system Git, which provides an additional source of data. Because Git's architecture is fully distributed, revision data can be extracted directly from every cloned copy of a repository.

The jQuery JavaScript library was selected for analysis from among the highest-rated community projects on GitHub. It ranked third in the index (as of 8 May 2013). The first two projects, Twitter Bootstrap and Node.js, were excluded either because of their direct ties to a commercial entity or because of the scope of available data and community maturity. An additional reason for excluding the two most popular projects was the authors' intention to choose a typical OSC. Such a community should have achieved a sufficient degree of maturity and been exposed to minimal external influences on its development.

The selected OSC is a widely popular JavaScript library, first introduced in 2006 by John Resig, a former developer at the Mozilla Foundation. The community's age, its data availability and its mature development practices make it ideal for the exemplary OSC vitality analysis.

The analysis in the following sections is based on several sources: community data available on GitHub, the jQuery Git repository, Ohloh (a public directory of open source software owned by Black Duck Software, Inc.) and the GHTorrent project. It also draws on community pages, blog entries, the mailing list and similar documentation of the jQuery project. The jQuery Git repository was mined with the open source tool GitStats[1].

The following subsections contain illustrative data for the OSC vitality analysis of the representative OSC. Data availability is discussed within the respective method sections.

Index based analysis

Looking at external OSC metrics, the relative number of OSC committers, community online popularity and release frequency can be derived from publicly available sources and are thus used for establishing a base performance index in the respective categories.

Figure 23 depicts the number of active contributors per month and thus illustrates changes in commit activity over time. Furthermore, the graph highlights the activity peaks around each major release (see Table 7) and shows the overall growth in activity when the project transitioned to a major release (v. 1.5) in 2010.
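The data for this figure was mined with GitStats. A comparable count of unique contributors per month can also be sketched directly from `git log` output. This is a minimal sketch that assumes a contributor is identified by the author e-mail, so people committing under several addresses are counted more than once. It also assumes the log was produced with `git log --pretty=format:%ae%x09%ad --date=short`, which prints one tab-separated e-mail and date per commit. The function names are hypothetical, and the sketch is an independent illustration, not a reproduction of GitStats.

```python
import subprocess
from collections import defaultdict


def contributors_per_month(log_text):
    """Count unique author e-mails per month.

    log_text: lines of the form 'email<TAB>YYYY-MM-DD', one per commit.
    """
    authors = defaultdict(set)
    for line in log_text.splitlines():
        if not line.strip():
            continue
        email, day = line.split("\t", 1)
        authors[day[:7]].add(email.strip().lower())  # bucket by 'YYYY-MM'
    return {month: len(emails) for month, emails in sorted(authors.items())}


def read_git_log(repo_path):
    """Run git log on a local clone (requires git on the PATH)."""
    result = subprocess.run(
        ["git", "-C", repo_path, "log", "--pretty=format:%ae%x09%ad", "--date=short"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout


# Illustrative log excerpt (made-up addresses).
sample = (
    "a@example.org\t2010-01-05\n"
    "b@example.org\t2010-01-20\n"
    "A@example.org\t2010-01-28\n"
    "b@example.org\t2010-02-02\n"
)
counts = contributors_per_month(sample)
```

With a local clone, `contributors_per_month(read_git_log("path/to/clone"))` would produce the monthly series. The path here is a placeholder.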

Figure 23 – Sample OSC: Number of unique contributors per month

The most recent GitHub API does offer the option to extract the overall number of downloads. However, this data attribute is not tracked over time and does not take downloads from other mirrors into account. Unfortunately, the jQuery Foundation does not provide an aggregate overview of the total number of downloads for historic releases. This vitality indicator is therefore not included in this analysis.

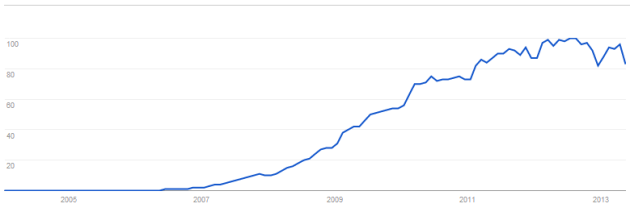

Figure 24 – Sample OSC: Online popularity in Google trending topics

A direct approach for assessing an OSC's online popularity is Google Trends. The tool depicts the overall trend for queries mentioning a particular word or topic. Google Trends can be problematic or inaccurate for keywords that also occur within unrelated expressions or sentences. This risk is unlikely to be relevant for the unique name jQuery. Note, however, that search volume does not reflect sentiment: a community that has received negative publicity or bad reviews could also appear as a positive search trend. Figure 24 depicts the trend performance for jQuery. The y-axis represents percent values, with 100% set at the highest observed search frequency.

The final vitality indicator within the external OSC metrics fragment is release frequency. This information is available through a number of sources, including GitHub and OS project trackers. For this analysis, the data was extracted directly from the project's community blog. Table 7 lists all major software releases, together with the time elapsed in months between consecutive releases.

| Version | Release date | Months since previous release |
|---|---|---|
| 2.0.0 | 18 April 2013 | 2 |
| 1.9.1 | 4 February 2013 | 1 |
| 1.9.0 | 15 January 2013 | 5 |
| 1.8.0 | 9 August 2012 | 9 |
| 1.7 | 3 November 2011 | 6 |
| 1.6 | 3 May 2011 | 4 |
| 1.5 | 31 January 2011 | 12 |
| 1.4 | 14 January 2010 | 12 |
| 1.3 | 14 January 2009 | 16 |
| 1.2 | 10 September 2007 | 8 |
| 1.1 | 14 January 2007 | 5 |
| 1.0 | 26 August 2006 | – |

Table 7 – Sample OSC: Release frequency
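The elapsed-time column can be derived directly from the release dates. This sketch assumes that elapsed time is counted in calendar months and ignores the day of the month. The names `RELEASES`, `months_between` and `elapsed_months` are hypothetical.

```python
from datetime import date

# Release dates from Table 7, newest first.
RELEASES = [
    ("2.0.0", date(2013, 4, 18)), ("1.9.1", date(2013, 2, 4)),
    ("1.9.0", date(2013, 1, 15)), ("1.8.0", date(2012, 8, 9)),
    ("1.7", date(2011, 11, 3)), ("1.6", date(2011, 5, 3)),
    ("1.5", date(2011, 1, 31)), ("1.4", date(2010, 1, 14)),
    ("1.3", date(2009, 1, 14)), ("1.2", date(2007, 9, 10)),
    ("1.1", date(2007, 1, 14)), ("1.0", date(2006, 8, 26)),
]


def months_between(earlier, later):
    """Calendar-month difference that ignores the day of the month."""
    return (later.year - earlier.year) * 12 + (later.month - earlier.month)


def elapsed_months(releases):
    """Map each version to the months elapsed since the preceding release."""
    return {
        version: months_between(previous_date, release_date)
        for (version, release_date), (_, previous_date) in zip(releases, releases[1:])
    }


intervals = elapsed_months(RELEASES)
```

Counting calendar months keeps the column consistent with the monthly granularity used for the indices that follow. A day-based count divided by an average month length would produce fractional values instead.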

As suggested by the method fragment, all measured indices are aggregated into one external performance index (Figure 25). For this purpose, each metric was first recorded for every month between Aug. 2007 and Apr. 2013. Because the metrics use different measuring units, their performance was expressed as changes in percent relative to a standardized base value. The index for the number of active contributors per month is operationalized as changes in percent compared to an average of 8 contributors per month. This value is the project's actual average over its entire lifespan, rounded to an integer. Release frequency is recorded relative to a frequency of 2 releases per year, or approximately 0.17 releases per month. Online popularity is already measured in percent values relative to an established base value, so this index was included in the analysis as is.
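The normalization and equal-weight aggregation for a single month can be sketched as follows. The text speaks of changes in percent relative to a base value. This sketch assumes the common convention of expressing each metric as a percentage of its base, so the base itself scores 100. It also takes the monthly number of releases as the raw release-frequency value and uses the Google Trends value unchanged. These conventions and the function names are assumptions.

```python
from statistics import mean

CONTRIBUTOR_BASE = 8    # lifetime average of active contributors per month
RELEASE_BASE = 2 / 12   # two releases per year, about 0.17 per month


def relative_percent(value, base):
    """Express a raw monthly value as a percentage of its base (base = 100)."""
    return value / base * 100


def external_index(contributors, releases, popularity):
    """Equal-weight external performance index for one month.

    contributors: active contributors in the month
    releases: number of releases published in the month
    popularity: Google Trends value (0-100), used as is
    """
    return mean([
        relative_percent(contributors, CONTRIBUTOR_BASE),
        relative_percent(releases, RELEASE_BASE),
        popularity,
    ])


quiet_month = external_index(contributors=10, releases=0, popularity=80)
release_month = external_index(contributors=8, releases=1, popularity=80)
```

Under this convention a single release month scores 600 on the release component. This illustrates why months with a release stand out as spikes in the aggregate.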

Note that this approach is only suitable for a one-time analysis, because the 100% mark of the popularity index is always set to the highest search frequency in a community's history. If an OSC reaches a new record in search frequency, historic data must therefore be adjusted to maintain index consistency. Furthermore, the index is only designed to indicate trends and therefore uses average community performance as the reference for activity comparison. Minimum activity could also serve as a reference point. This was avoided because it would produce an overly steep upward trend on the graph and make long-term trends harder to observe.
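The consistency problem can be reproduced with a peak-normalized series. The sketch assumes access to raw monthly search counts. Google Trends does not expose these directly, so the counts below are purely illustrative. The function name `normalize_to_peak` is hypothetical.

```python
def normalize_to_peak(raw_counts):
    """Scale search counts so that the all-time maximum equals 100."""
    peak = max(raw_counts.values())
    return {month: count / peak * 100 for month, count in raw_counts.items()}


# Illustrative raw search counts (made up).
counts = {"2012-01": 50, "2012-02": 100}
before = normalize_to_peak(counts)   # 2012-02 is the peak

counts["2012-03"] = 200              # a new record month arrives
after = normalize_to_peak(counts)    # every historic value is rescaled
```

In this example the value for 2012-01 drops from 50 to 25 without any change in the underlying search volume. This is why historic index values must be recomputed whenever a new peak occurs.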

In addition to the regular aggregate index, two further indices were added to illustrate the impact of adjusting the weights of individual variables. The second index doubles the weight of the number of active contributors. The third index halves the weight of online search popularity. All occurring spikes are linked either to activity around a new version release (see Table 7) or to a significant increase in release frequency. The latter is especially noticeable for Oct. 2012 and Feb. 2013. Both spikes are primarily caused by a drastic change in release frequency, to one and two months respectively. These releases occurred significantly more frequently than the average of only two new versions per year.
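The three index variants differ only in their weights. This is a minimal sketch that assumes a weighted arithmetic mean normalized by the sum of the weights, since the text does not state how the weights are normalized. It uses made-up component values for a single month, and `weighted_index` is a hypothetical name.

```python
def weighted_index(components, weights):
    """Weighted mean of per-metric index values for one month.

    components, weights: dicts keyed by metric name; weights need not sum to 1.
    """
    total_weight = sum(weights[m] for m in components)
    return sum(components[m] * weights[m] for m in components) / total_weight


# Made-up component values for one month.
month = {"contributors": 125.0, "releases": 0.0, "popularity": 80.0}

regular = weighted_index(month, {"contributors": 1, "releases": 1, "popularity": 1})
double_contributors = weighted_index(
    month, {"contributors": 2, "releases": 1, "popularity": 1})
half_popularity = weighted_index(
    month, {"contributors": 1, "releases": 1, "popularity": 0.5})
```

Normalizing by the weight sum keeps all three variants on the same scale, so their curves can be plotted side by side.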

Figure 25 – Sample OSC: External performance index

In contrast to the external metrics, data availability for internal OSC performance indicators is limited. One reason for the lack of consistent data is the age of the community. The OSC has undergone a number of hosting transitions, e.g. for its forums. Unlike the data available via Git repositories, these do not provide consistent long-term records. Furthermore, the community lacks a dedicated mailing list and often refers support questions to other platforms, such as the Stack Overflow [2] community, which covers a large number of jQuery-related support discussions.

However, the jQuery Foundation does list short-term data on forum activity and ticket-based issue tracking for the last week or month. Suitable long-term data could therefore be accumulated by recording these figures regularly over an extended period.

The only vitality indicator selected for evaluation that covers the entire OSC life span is the number of code contributions. This indicator serves two purposes. It highlights the number and frequency of commits, and it visualizes OSC activity cycles over a time span of 6 years (see Figure 26).

The figure shows a seasonal activity pattern, with peaks around the turn of the year in five out of six years and low activity levels during the summer periods. The only exception occurred in summer 2012, when the community released a new library version outside its typical pattern.

Figure 26 – Sample OSC: Activity cycle in commits per month

Combining the external performance indicators with the available internal data on commit frequency produces an aggregate index that incorporates all long-term community activity into a single index. Figure 27 depicts this unified index, which combines the external performance index of Figure 25 with the OSC commit frequency introduced in Figure 26. Commit frequency is expressed as a relative percent value based on an average of 60 commits per month. All aggregated variables were included with equal weight, because the internal OSC vitality indicators are represented by only one variable. Weighting the internal and external groups equally instead would give commit frequency a disproportionate influence on the aggregate index. A second index was added alongside the ordinary aggregate index. It corrects for peak activity in winter and low activity in summer by reducing the significance of three winter months by 20% and increasing that of three summer months by 20%.
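The seasonal correction applied to the second index can be sketched as a fixed multiplier per calendar month. The text does not name the months, so this sketch assumes December to February as winter and June to August as summer. It interprets a 20% reduction or increase in significance as multiplying the index value by 0.8 or 1.2. The function name `season_corrected` is hypothetical.

```python
WINTER = {12, 1, 2}  # assumed winter months
SUMMER = {6, 7, 8}   # assumed summer months


def season_corrected(index):
    """Apply a fixed 20% seasonal correction to a monthly index.

    index: dict 'YYYY-MM' -> index value.
    """
    corrected = {}
    for key, value in index.items():
        month = int(key[5:7])
        if month in WINTER:
            value *= 0.8  # damp the typical winter peak
        elif month in SUMMER:
            value *= 1.2  # lift the typical summer low
        corrected[key] = value
    return corrected


corrected = season_corrected({"2012-01": 150.0, "2012-04": 100.0, "2012-07": 50.0})
```

Fixed factors are simpler than factors estimated from history. They do not adapt, however, if the community's activity cycle shifts over time.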

Figure 27 – Sample OSC: Aggregated performance Index and linear trend lines

It is noteworthy that when evaluating the graph, measurement consistency is most important while exact performance values lack significance. All index activity values are measured in relation to an established base value and could indicate stronger or weaker growth if the base is modified.

Questionnaires and Likert scales

Following the primarily data-driven analysis of OSC activity, this section presents a more qualitative view of the sample OSC's practices. It covers code and documentation quality, as well as core developer reputation. Given the illustrative nature of this example, no community members were interviewed for the OSC vitality assessment. Instead, this sub-section introduces a number of techniques and Likert scales that illustrate a possible application of the OSC Health Analysis method.

The first fragment within this category deals with open source code quality and requires predefined quality criteria in order to rate the component in question. While code quality ratings can be controversial and depend heavily on the programming language, the reviewers' preferences or the application's purpose, generally accepted criteria include the application's architecture, adherence to the DRY principle (don't repeat yourself), component modularization, coding practices (e.g., for documentation) and the use of well-established software design patterns.

To allow for simple comparison of changes in quality, a five-level Likert scale can be used. For the sample OSC, the scale levels can be defined as follows, from highest to lowest:

- Highly structured and optimized code

- Well-structured code with little repetition

- Moderately structured code without best practices

- Manageable code structure with a number of complex dependencies

- Low code quality with bad practices applied

Although a code review by a development team can bring valuable insights into a community's suitability for a given project, it is a highly time-consuming and expensive approach. An alternative source for OSC quality assessment is aggregated online reviews written by OSC users on platforms such as ohloh.net. Owing to the great popularity of the sample OSC, the library had received a total of 799 reviews (as of 17 May), with an average rating of 4.75 out of 5 stars. Applied to the above scale, this rating would rank the OSC's code as highly structured and optimized.
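The step of applying an average star rating to the five-level scale can be made explicit. The sketch below assumes a linear correspondence in which 5 stars maps to the highest level and 1 star to the lowest, with averages rounded half up to the nearest whole star; neither the rounding rule nor the class and function names come from the method itself.

```python
from enum import IntEnum


class CodeQuality(IntEnum):
    """Five-level code quality scale from the text (5 = best)."""
    LOW_QUALITY_BAD_PRACTICES = 1
    MANAGEABLE_COMPLEX_DEPENDENCIES = 2
    MODERATELY_STRUCTURED = 3
    WELL_STRUCTURED = 4
    HIGHLY_STRUCTURED_OPTIMIZED = 5


def level_from_stars(average_stars: float) -> CodeQuality:
    """Map an average 1-5 star review rating to the nearest scale level (assumed rule)."""
    if not 1.0 <= average_stars <= 5.0:
        raise ValueError("average rating must lie between 1 and 5 stars")
    # Round half up rather than using round(), which rounds halves to even.
    return CodeQuality(int(average_stars + 0.5))


if __name__ == "__main__":
    print(level_from_stars(4.75).name)  # the sample OSC's average rating
```

Using an `IntEnum` keeps the levels ordered, so ratings from successive assessments can be compared directly.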

Analogous to code quality, the purpose of the documentation quality fragment is to create a Likert scale that describes the state of an OSC's documentation and to rate its performance over time. In contrast to code quality, documentation quality is less expensive to evaluate and requires little content-specific expertise. Factors influencing quality include the degree of detail, the presence of code examples, the update frequency, and ease of use and accessibility. A sample scale for documentation quality can be structured as follows:

- Very well-structured, exhaustive and up to date documentation

- Well-structured, exhaustive and mostly up to date documentation

- Mostly well-structured but partially outdated documentation

- Only partially structured or outdated documentation

- Poorly structured or unstructured documentation

In the case of the example OSC, the authors rate the community at the highest quality level. It provides both very well-structured documentation that is fully up to date and an additional dedicated learning center, designed to quickly familiarize new developers with the library's capabilities and to introduce examples of new code features.

The third method fragment within the category deals with core developer reputation. Starting with a general ranking, the following scale can be used for assessing top OSC developers:

- Widely recognized expert known beyond his/her domain of expertise

- Recognized domain expert

- Developer with acknowledged expertise (within the OSC)

- Active contributor to the OSC

- Less known developer

One approach to identifying core developers is mining the Git repository. Indicators such as the number or share of repository commits can serve as an approximation of a developer's significance within the community. An analysis of the sample OSC revealed the ranking depicted in Table 8. John Resig has made by far the most contributions to the OSC. Resig is widely considered the founder of the community and enjoys broad recognition within a number of technical domains related to web technologies. Furthermore, Resig is a familiar actor within the broader open source community and counts the Mozilla Corporation as a former employer. Based on these observations, the authors believe he can be placed in the highest category of the above scale. Although less known than Resig, other top contributors within the community enjoy a solid reputation and can be ranked in the second or third category of the above Likert scale.

| Name | Number of commits (% of total contributions) | Number of lines of code added | Number of lines of code removed |
|---|---|---|---|
| John Resig | 1714 (32.99%) | 106571 | 92494 |
| Dave Methvin | 478 (9.20%) | 6851 | 7260 |
| Timmy Willison | 404 (7.78%) | 6034 | 4857 |
| Julian Aubourg | 330 (6.35%) | 17875 | 15188 |
| Jörn Zaefferer | 327 (6.29%) | 22681 | 21149 |
| Rick Waldron | 308 (5.93%) | 6734 | 12142 |
| Brandon Aaron | 250 (4.81%) | 11102 | 5408 |
| Ariel Flesler | 200 (3.85%) | 18053 | 3317 |
| Richard Gibson | 138 (2.66%) | 5401 | 4751 |
| Oleg Gaidarenko | 104 (2.00%) | 1927 | 1387 |

Table 8 – Sample OSC: Core developers ranked by number of commits
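A commit-share ranking like the one in Table 8 can be approximated from `git shortlog -s -n HEAD`, which prints one line per author consisting of the commit count, a tab and the author name. The sketch below parses that output and computes each author's share of all commits. It is an illustrative approach rather than the authors' tooling, the function names are hypothetical, and it does not cover the lines added and removed. Authors who committed under several names or e-mail addresses should first be merged (for example via a `.mailmap` file) for the shares to be meaningful.

```python
import subprocess


def shortlog_counts(repo_path: str) -> str:
    """Return raw `git shortlog -s -n HEAD` output for a local repository."""
    result = subprocess.run(
        ["git", "shortlog", "-s", "-n", "HEAD"],
        cwd=repo_path, capture_output=True, text=True, check=True,
    )
    return result.stdout


def commit_shares(shortlog_output: str) -> list[tuple[str, int, float]]:
    """Parse 'count<TAB>name' lines into (name, commits, percent of total), largest first."""
    rows = []
    for line in shortlog_output.splitlines():
        if not line.strip():
            continue
        count, name = line.strip().split("\t", 1)
        rows.append((name, int(count)))
    total = sum(count for _, count in rows)
    ranked = sorted(rows, key=lambda row: row[1], reverse=True)
    return [(name, count, 100.0 * count / total) for name, count in ranked]


if __name__ == "__main__":
    # Illustrative shortlog output with hypothetical authors.
    sample = "   60\tDeveloper A\n   30\tDeveloper B\n   10\tDeveloper C\n"
    for name, count, share in commit_shares(sample):
        print(f"{name}: {count} ({share:.2f}%)")
```

Separating the `git` call from the parsing keeps the ranking logic testable without a repository checkout.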

The final proposed method fragment of this sub-section deals with core developer motivation. It suggests identifying community leaders, designing a questionnaire to assess their motivation for contributing to the community, and then conducting interviews to acquire the necessary data. The approach for core developer identification overlaps with the activities of the core developer reputation review and thus does not need to be repeated at this step. The leading OSC contributors identified in that review are listed in Table 8.

Using a five-level Likert scale ranging from “does not apply” to “fully applies”, interviewees can be asked to what degree the following statements apply:

- I am motivated to contribute to the community.

- I have sufficient time to participate in community activities and to invest development time.

- I perceive my contribution to the community as rewarding and feel appreciated.

- I feel that the community is moving forward in a good direction.

- I feel that I am being supported by fellow OSC developers.

- I feel that I am being supported by all community members.

- I feel that the created software is valued by the community.

- I feel that my participation in the community is improving my professional skills.

The purpose of the above questions is to assess the core developers' motivation to continue leading the project. Ideally, the measurement should be repeated over time in order to identify positive or negative trends within the core team. The results and their implications for the entire OSC can be reviewed in light of the dependencies of the community interaction model, introduced in section 4.02.
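Repeated interview rounds can be summarized numerically to make such trends visible. The sketch below assumes that the five answer levels are coded 1 ("does not apply") to 5 ("fully applies"), averages each developer's answers to the eight statements into a motivation score per round, and reports the change between the first and the latest round. The coding, the equal weighting of statements and the function names are assumptions for illustration, and the sample answers are invented.

```python
from statistics import mean

LIKERT_MIN, LIKERT_MAX = 1, 5  # 1 = "does not apply", 5 = "fully applies" (assumed coding)


def motivation_score(answers: list[int]) -> float:
    """Mean of one developer's answers to the statements in one interview round."""
    if any(not LIKERT_MIN <= a <= LIKERT_MAX for a in answers):
        raise ValueError("answers must be on the 1-5 scale")
    return mean(answers)


def motivation_trend(rounds: dict[str, list[list[int]]]) -> dict[str, float]:
    """Change in score from the first to the latest round, per developer.

    `rounds` maps a developer to their answer lists in chronological order.
    Positive values indicate rising motivation, negative values a decline.
    Developers with fewer than two rounds are skipped.
    """
    return {
        developer: motivation_score(history[-1]) - motivation_score(history[0])
        for developer, history in rounds.items()
        if len(history) >= 2
    }


if __name__ == "__main__":
    # Hypothetical answers for two interview rounds; no real interviews were conducted.
    rounds = {
        "core developer 1": [[5, 4, 4, 5, 4, 4, 5, 4], [4, 3, 3, 4, 4, 3, 5, 4]],
        "core developer 2": [[3, 3, 4, 4, 4, 3, 4, 4], [4, 4, 4, 5, 4, 4, 4, 5]],
    }
    for developer, change in motivation_trend(rounds).items():
        print(f"{developer}: {change:+.3f}")
```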

Community practices

Given its age and level of maturity, the sample OSC follows all of the suggested software development practices. In particular, a number of development processes have been consistently standardized across jQuery core, as well as other jQuery projects, such as jQuery UI or jQuery Mobile. An overview of the evaluated activities is depicted in Table 9.

| OSC Development Practice | Presence |
|---|---|
| Defined Release Schedule | yes |
| Systematic Testing Methods | yes |
| Formal Requirements Management | yes |
| Standardized Software Development Processes | yes |

Table 9 – Sample OSC: Software development practices in place

Suitable partners for business alignment

The final fragment of the OSC Health Analysis Method does not deal directly with vitality assessment but with business alignment with other stakeholders that share an interest in the same OSC. The purpose of the associated activities is to optimize resource allocation for vitality assessment, which can be used either to reduce monitoring costs or to increase measurement accuracy or scope.

Given the domain of the sample OSC, the primary target groups for monitoring alignment are software developers with a focus on web development solutions. These can include providers of content management systems and web frameworks, as well as front-end developers. Alternatively, smaller organizations that strongly depend on the library can also be considered suitable partners for shared monitoring activities.

In the case of the sample OSC, it is particularly easy to find suitable candidate organizations. The jQuery Foundation, which is the umbrella organization for jQuery core and other jQuery-based OS projects, publicly lists its corporate foundation members. The listed gold, silver and bronze members include both non-profit and commercial organizations, all of which can be considered strong supporters of jQuery.
